Hi there!

This is one for the nerds

A couple of days ago @shensley and I talked about the vague possibility of automated CI tests on actual hardware; that is: running some automated tests on an actual Daisy Seed whenever someone makes a pull request.

It sounded like a pipe dream to me, but the more I think about it, the more I’m starting to like the idea. Setting this up sounds like a fun challenge, and it would also be very beneficial for the project. I think it would remove A LOT of work going forward - painful manual testing of SPI, I2C, audio, etc. could be entirely replaced with automated tests. I haven’t seen anything like this on another open source “hardware” project before, but imagine how cool it would be! If that doesn’t make your heart beat fast with excitement, I don’t know what will.

In this thread, I’d like to write down some of the thoughts I had. It would be wonderful if some other people would chime in on this idea. It’s a lot of work for a single person, but we can nicely split this into smaller chunks and spread the work across many shoulders.

So, here’s what I have in mind:

- A Raspberry Pi (or a similar low-spec computer) is connected to one or more Daisy Seeds. The Seeds are connected in various ways (audio loopback, SPI interconnection, maybe even additional hardware/chips such as shift registers, etc.). The whole assembly could just be a breadboard + the Raspberry Pi mounted on a wooden board.

- The Raspberry Pi runs a small Jenkins server. This server is accessible 24/7 from the web via dynDNS. I’ve set something like this up before, and it’s actually very easy to do. Eventually, it would be a normal web server URL like this: https://libdaisy-hardware-test.electro-smith.com

- On this Jenkins instance, we have a special build job that can be triggered from our GitHub Actions workflow in the libDaisy repo (e.g. with this plugin).

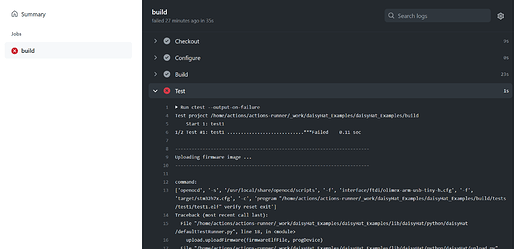

- This job clones the libDaisy repo and starts executing tests on the Daisy Seed(s). If the tests succeed, the Jenkins job succeeds, which in turn reports back to our GitHub Actions job in libDaisy.

With this taken care of, here’s what would happen when a pull request is made:

- The GitHub Actions workflow in the libDaisy repo connects to the Raspberry Pi via the known URL, e.g. https://libdaisy-hardware-test.electro-smith.com, and starts the job. The job parameters are the libDaisy repo URL and the commit hash to build.

- The Raspberry Pi checks out the desired commit from the repo and starts running the tests.

- Eventually, the tests complete and the Jenkins job finishes with a result.

- The GitHub Actions workflow in the libDaisy repo collects the result, and we can see a red/green check in the pull request.
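
To make the first step a bit more concrete, here’s a rough sketch of how a script called from the GitHub Actions side could start the job through Jenkins’ remote `buildWithParameters` endpoint. The job name `libdaisy-hardware-tests` and the parameter names `REPO_URL` and `COMMIT` are made up for illustration, and the plugin mentioned above may well do this differently.

```python
import base64
import urllib.parse
import urllib.request

# Hypothetical values: the host is the example URL from this post; the job
# name and the parameter names are invented for illustration.
JENKINS_URL = "https://libdaisy-hardware-test.electro-smith.com"
JOB_NAME = "libdaisy-hardware-tests"


def build_trigger_url(repo_url: str, commit: str) -> str:
    """Return the Jenkins 'buildWithParameters' URL for one test run."""
    query = urllib.parse.urlencode({"REPO_URL": repo_url, "COMMIT": commit})
    job = urllib.parse.quote(JOB_NAME)
    return f"{JENKINS_URL}/job/{job}/buildWithParameters?{query}"


def trigger_build(repo_url: str, commit: str, user: str, api_token: str) -> str:
    """POST the trigger request and return the queue item URL from Jenkins."""
    request = urllib.request.Request(
        build_trigger_url(repo_url, commit), method="POST"
    )
    # Jenkins accepts HTTP basic auth with a user name and an API token.
    credentials = base64.b64encode(f"{user}:{api_token}".encode()).decode()
    request.add_header("Authorization", f"Basic {credentials}")
    with urllib.request.urlopen(request, timeout=30) as response:
        # On success Jenkins points at the queued build in "Location".
        return response.headers.get("Location", "")
```

The workflow would then have to poll the returned queue item (and later the build itself) until a result is available. That part is left out here.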

As for the actual tests, here are my ideas:

- Each test has its own directory in libDaisy, with contents like this:
  - `libDaisy/.../<myTestName>/firmware/*` - a normal makefile firmware project with a `main.cpp`, `Makefile` and some other files for the test.
    - If multiple Daisy Seeds are involved in the test, multiple firmware folders may exist.
  - `libDaisy/.../<myTestName>/runTest.py` - This is the entry point for the test.
    - The script does something like this:
      - build the firmware
      - flash to Daisy Seed
      - send “start” command over USB-UART
      - wait until result is received over USB-UART (or timeout)

- To make the individual tests clean and short, we could have some shared test tooling like this:
  - `libDaisy/.../tooling/*`
    - Contains helper code that’s used on the firmware side of the tests, e.g. functions for the communication with the Raspberry Pi like `waitForTestStart()`, `finishTest(Result::failure)`
    - Contains helper code for the Jenkins side of the test, e.g. Python functions like `buildAndFlashFirmware(firmwarePath)`, `runTestAndGetResult()`
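
Here’s a minimal sketch of what the Python side of a `runTest.py` could look like, following the four steps listed above. Everything specific in it is an assumption on my part: the `start` / `PASS` / `FAIL` strings on the wire, the `program-dfu` flash target, the `/dev/ttyACM0` port name, and the use of pyserial. The two helpers correspond to the proposed `buildAndFlashFirmware()` and `runTestAndGetResult()`, just in Python naming style.

```python
import subprocess
import time
from pathlib import Path

# Hypothetical wire protocol: the host sends "start", the firmware answers
# with a single line that is either "PASS" or "FAIL".
START_COMMAND = b"start\n"


def build_and_flash_firmware(firmware_path: Path,
                             flash_target: str = "program-dfu") -> None:
    """Build the makefile project, then flash it (target name is assumed)."""
    subprocess.run(["make"], cwd=firmware_path, check=True)
    subprocess.run(["make", flash_target], cwd=firmware_path, check=True)


def run_test_and_get_result(port, timeout_s: float = 30.0) -> bool:
    """Send the start command, then wait for a verdict line or a timeout.

    `port` is any object with write(bytes) and readline() -> bytes, for
    example a pyserial `serial.Serial` opened with a short read timeout.
    """
    port.write(START_COMMAND)
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        line = port.readline().decode("ascii", errors="replace").strip()
        if line == "PASS":
            return True
        if line == "FAIL":
            return False
        # Anything else (empty read, log output) is ignored.
    raise TimeoutError(f"no test result within {timeout_s} s")


def main() -> int:
    """Entry point sketch: exit code 0 on success, 1 on failure."""
    import serial  # pyserial (third-party), imported lazily

    build_and_flash_firmware(Path(__file__).parent / "firmware")
    # Port name and baud rate are placeholders.
    with serial.Serial("/dev/ttyACM0", 115200, timeout=1) as port:
        return 0 if run_test_and_get_result(port) else 1
```

Because `run_test_and_get_result()` only needs `write()` and `readline()`, it can be tried out with a fake port object and no hardware attached. A real script would probably also have to wait for the Seed to reboot and re-enumerate after flashing before opening the port.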

The whole setup should be easily reproducible by anyone.

- The hardware setup is clearly documented (what’s connected to what?)

- The Raspberry Pi setup should be available as a `setup.sh` script in libDaisy. Ideally, you’d only need to boot into Raspbian and execute this script, telling it the desired dynDNS URL and credentials; the script installs all dependencies and reboots the Raspberry Pi. Jenkins is automatically started and the dynDNS is ready to go.

I think this sounds like a fun challenge!

Who’s in? Let’s go!