I have been working on a guitar overdrive pedal using the Daisy Seed and the Cleveland Music Hothouse. I ran into a few issues along the way and wanted to share my solutions with the community.

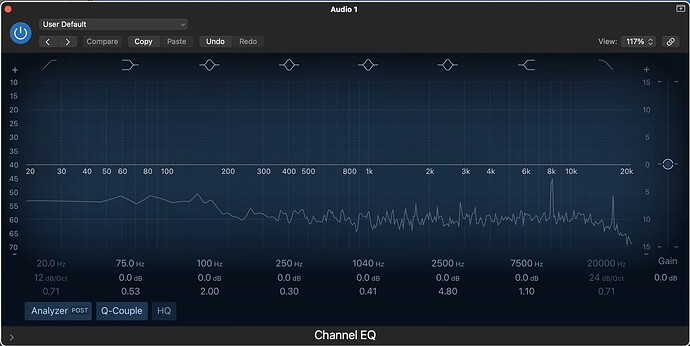

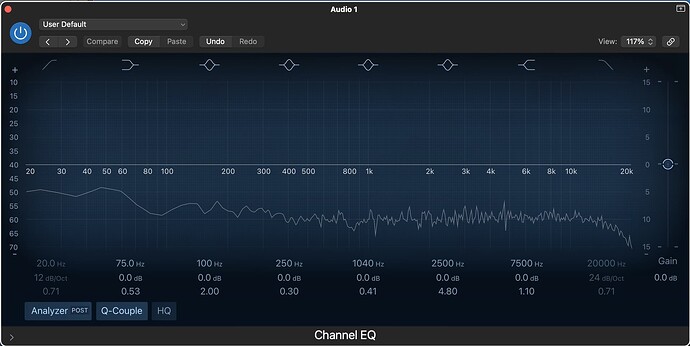

The first issue is that the ADC produces significant audible noise at the callback frequency (i.e. sample_rate/block_size) when the input signal is heavily boosted, as it must be in an overdrive pedal. This seems to be a known issue (see https://forum.electro-smith.com/t/questions-about-digital-noise-and-grounding/432/10), and I am working around it by decreasing the block size to 4 at a sample rate of 96 kHz, which puts the callback frequency at 24 kHz, above the audible range.
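The arithmetic behind this workaround can be written as a couple of helpers. This is a minimal sketch with hypothetical function names, and the 20 kHz hearing limit is an assumed threshold, not a value from the post:

```cpp
#include <cstddef>

// Rate at which the audio callback fires; callback-related noise appears here.
constexpr double CallbackRateHz(double sample_rate, std::size_t block_size)
{
    return sample_rate / static_cast<double>(block_size);
}

// Time between two consecutive audio callbacks, in microseconds.
constexpr double CallbackPeriodUs(double sample_rate, std::size_t block_size)
{
    return 1e6 * static_cast<double>(block_size) / sample_rate;
}

// True if the callback frequency lies above an assumed upper limit of hearing.
constexpr bool CallbackNoiseIsInaudible(double sample_rate,
                                        std::size_t block_size,
                                        double hearing_limit_hz = 20000.0)
{
    return CallbackRateHz(sample_rate, block_size) > hearing_limit_hz;
}
```

With a block size of 4 at 96 kHz the callback runs at 24 kHz, leaving roughly 41.7 us per callback; a block size of 48 at 48 kHz would put the noise at 1 kHz, well inside the audible range.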

This seems to have caused another problem, though: looking at the output on an oscilloscope, I saw a strange ripple in the signal that goes away when the overdrive is bypassed (in bypass I just memcpy the input to the output).

At first I thought this might be due to instability in the potentiometer readings, but on further investigation it turned out to be related to CPU utilization, which was surprising because my CPU usage was below 80%. My effect oversamples the input to reduce the aliasing caused by the nonlinear transfer function of the overdrive, and halving the oversampling factor brought CPU usage below 50% and fixed the issue. This is not a satisfying solution, though, because I would like to keep the higher oversampling rate and be able to use the whole CPU.
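To illustrate why the oversampling factor drives CPU usage, here is a deliberately naive oversampled soft clipper. It is hypothetical and not the code used in this pedal: tanh stands in for whatever curve the overdrive actually uses, and linear-interpolation upsampling plus plain averaging are crude anti-aliasing filters chosen for brevity, where a real design would use properly designed filters.

```cpp
#include <cmath>
#include <cstddef>

// Naive N-times oversampled soft clipper (illustrative sketch only).
class OversampledClipper
{
  public:
    explicit OversampledClipper(std::size_t factor) : factor_(factor) {}

    float Process(float x, float drive)
    {
        float acc = 0.0f;
        for (std::size_t k = 1; k <= factor_; ++k)
        {
            // Linearly interpolate between the previous and current input sample.
            const float t  = static_cast<float>(k) / static_cast<float>(factor_);
            const float up = prev_ + t * (x - prev_);
            // The nonlinearity runs factor_ times per input sample.
            acc += std::tanh(drive * up);
        }
        prev_ = x;
        // Averaging the sub-samples acts as a crude lowpass before decimation.
        return acc / static_cast<float>(factor_);
    }

  private:
    std::size_t factor_;
    float       prev_ = 0.0f;
};
```

Each output sample costs `factor` evaluations of the nonlinearity, so halving the factor roughly halves that part of the per-sample work.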

My next theory was that the cause could be the timing of the transfers from the ADC and to the DAC, and this turned out to be correct. The Daisy Seed uses circular DMA for the transfers, with a buffer of twice the block size. After the first half of the buffer has been received from the ADC, HAL_SAI_RxHalfCpltCallback is called from an interrupt service routine; it calls some Daisy Seed internal callbacks, which eventually call the user’s audio callback. The internal callback that calls the user’s audio callback converts the data in the ADC’s rx buffer from ints to floats and stores them in a float buffer on the stack, and the user’s audio callback writes its output into another float buffer on the stack. After the user’s audio callback returns, the internal callback converts the floats back to ints and stores them in the DAC’s tx buffer. The same thing then happens for the second half of the buffer in HAL_SAI_RxCpltCallback, after which the DMA wraps back to the beginning of the buffer, and the two callbacks keep alternating, each one firing when its half of the buffer has been completely filled.
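A minimal host-side sketch of this double-buffered flow is shown below. It assumes mono signed 16-bit samples and uses hypothetical names (`ProcessHalf`, `OnRxHalfComplete`, `OnRxComplete`). libDaisy’s real internal callbacks, sample format, and scaling differ, and the real HAL callbacks take a `SAI_HandleTypeDef*`.

```cpp
#include <cstddef>
#include <cstdint>

constexpr std::size_t kBlockSize = 4;              // samples per callback (mono)
constexpr std::size_t kDmaSize   = 2 * kBlockSize; // circular buffer = two halves

int16_t rx_dma[kDmaSize]; // filled by the ADC DMA
int16_t tx_dma[kDmaSize]; // drained by the DAC DMA

using AudioCallback = void (*)(const float* in, float* out, std::size_t size);

// Process one half: int -> float, user callback, float -> int.
void ProcessHalf(std::size_t offset, AudioCallback cb)
{
    float in[kBlockSize];  // temporary float buffers on the stack
    float out[kBlockSize];
    for (std::size_t i = 0; i < kBlockSize; ++i)
        in[i] = rx_dma[offset + i] / 32768.0f;
    cb(in, out, kBlockSize);
    for (std::size_t i = 0; i < kBlockSize; ++i)
    {
        float s = out[i];
        if (s > 1.0f) s = 1.0f; // clamp before converting back to int
        if (s < -1.0f) s = -1.0f;
        tx_dma[offset + i] = static_cast<int16_t>(s * 32767.0f);
    }
}

// Stand-ins for the two rx DMA callbacks.
void OnRxHalfComplete(AudioCallback cb) { ProcessHalf(0, cb); }          // front half ready
void OnRxComplete(AudioCallback cb)     { ProcessHalf(kBlockSize, cb); } // back half ready
```

Each callback touches only its own half, so the DMA can keep filling and draining the other half while the callback runs.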

To understand what is happening here and how to fix it, we need to know the timing of four events:

- Start time of ADC rx transfer

- End time of ADC rx transfer

- Start time of DAC tx transfer

- End time of DAC tx transfer

The audio callback needs to start after the end of the ADC rx transfer and must read all of its input before the start of the next ADC rx transfer for the same half of the buffer. It must write its result to the tx buffer before the start of the next DAC tx transfer for its half of the buffer, but it must not write any output before the end of the previous DAC tx transfer for its half of the buffer.
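These four constraints can be written as a single predicate over event times. This is a sketch with hypothetical struct and field names. The times would come from measurements of the four events, and the values in any test of it are made up.

```cpp
// Event times (in microseconds) relevant to one half of the circular buffers.
struct HalfTiming
{
    double rx_end;        // end of the ADC rx transfer that filled this half
    double next_rx_start; // start of the next ADC rx transfer into this half
    double prev_tx_end;   // end of the previous DAC tx transfer out of this half
    double next_tx_start; // start of the next DAC tx transfer out of this half
};

// What the audio callback does with that half, in the same time base.
struct CallbackWindow
{
    double start;       // callback begins reading input
    double last_read;   // last read from the rx half
    double first_write; // first write to the tx half
    double last_write;  // last write to the tx half
};

// True if the callback satisfies all four ordering constraints.
bool CallbackIsSafe(const HalfTiming& t, const CallbackWindow& w)
{
    return w.start >= t.rx_end             // input is complete
        && w.last_read < t.next_rx_start   // input not yet overwritten
        && w.first_write >= t.prev_tx_end  // old output already sent
        && w.last_write < t.next_tx_start; // new output ready in time
}
```

The ripple described above corresponds to the last condition failing: the callback is still writing when the next tx transfer for its half begins.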

I measured the end times by instrumenting HAL_SAI_RxHalfCpltCallback, HAL_SAI_RxCpltCallback, HAL_SAI_TxHalfCpltCallback, and HAL_SAI_TxCpltCallback (the latter two are not currently used by the Daisy Seed, but I added them for this measurement) with code that records their relative timing. Running this with various block sizes showed the following order and timing:

- Tx half complete at time 0 us

- Rx half complete at time 10 us

- Tx complete at time block_size/sample_rate seconds

- Rx complete at time block_size/sample_rate seconds + 10 us

This pattern repeats indefinitely with the next iteration starting at time 2*block_size/sample_rate seconds.
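One way to do this kind of instrumentation is sketched below. It is a hypothetical event log, not the code used for these measurements: each of the four callbacks calls `Record()` with its own ID and a timestamp in microseconds. Where the timestamp comes from (for example, a hardware cycle counter) is left to the caller, and the analysis runs after capture.

```cpp
#include <cstddef>
#include <cstdint>

enum class SaiEvent : uint8_t { TxHalfCplt, RxHalfCplt, TxCplt, RxCplt };

struct TimedEvent
{
    SaiEvent event;
    uint32_t time_us;
};

template <std::size_t N>
class EventLog
{
  public:
    // Called from each callback; keeps only the first N events so it stays cheap.
    void Record(SaiEvent e, uint32_t now_us)
    {
        if (count_ < N)
            events_[count_++] = {e, now_us};
    }

    std::size_t Count() const { return count_; }
    SaiEvent    EventAt(std::size_t i) const { return events_[i].event; }

    // Time of event i relative to the most recent TxHalfCplt at or before it,
    // or -1 if no TxHalfCplt has been recorded yet.
    int64_t RelativeToTxHalf(std::size_t i) const
    {
        for (std::size_t j = i + 1; j-- > 0;) // j = i, i-1, ..., 0
            if (events_[j].event == SaiEvent::TxHalfCplt)
                return static_cast<int64_t>(events_[i].time_us) - events_[j].time_us;
        return -1;
    }

  private:
    TimedEvent  events_[N] = {};
    std::size_t count_     = 0;
};
```

Printing `RelativeToTxHalf()` for each logged event after a capture gives offsets in the same form as the list above.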

Measuring the start times is trickier because there are no callbacks or diagnostics for when they occur. I measured the rx start times by spin-looping on the first element of the rx buffer, recording when it changed, and comparing that time to the callback timings. The ADC rx DMA transfer seems to start approximately 3-4 us before the callback is called (this gap may be somewhat longer with larger block sizes). The tx start times are the hardest to measure, but if my theory is correct, adding a delay to the audio callback and increasing it until the ripple just starts to appear should reveal them. I did this and found that the ripple consistently starts to appear when the delay is around 10-15 us shorter than block_size/sample_rate seconds, across various block sizes.

The cause of the ripple is that the DMA transfer from the DAC’s tx buffer actually starts ~10-15 microseconds before the next ADC rx callback. If the previous callback hasn’t finished by then, some of the old samples already in the buffer are transferred instead of the newly computed ones. This isn’t a big deal with a large block size: if the callbacks happen only once per millisecond, losing 10-15 microseconds of processing time costs only 1-2% of the CPU. But if the callbacks happen 24,000 times per second, you have only ~41.7 microseconds between callbacks, and 10-15 microseconds translates into losing ~25-35% of your CPU!
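The lost budget is simply the early-start time divided by the callback period. A small helper with a hypothetical name makes the comparison concrete; the 10-15 us early start is the measured value above, passed in as a parameter.

```cpp
#include <cstddef>

// Fraction of each callback period that is unusable if the DAC tx DMA starts
// reading a half `early_us` microseconds before the next rx callback.
double LostCpuFraction(double sample_rate, std::size_t block_size, double early_us)
{
    const double period_us = 1e6 * static_cast<double>(block_size) / sample_rate;
    return early_us / period_us;
}
```

For a block size of 4 at 96 kHz, 10 and 15 us give about 0.24 and 0.36 of the period. With 48 samples at 48 kHz (one callback per millisecond), 15 us is 1.5%. Subtracting 15 us from the 41.7 us period leaves about 26.7 us, close to the ~26 us at which the ripple appears without a fix.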

This issue can be solved by delaying the output by block_size samples (i.e. one half of the DMA buffer). I have found two approaches that appear to work:

- Store the output of the user’s audio callback into an intermediate buffer instead of the DAC tx buffer, and then copy it into the DAC tx buffer in the next call to HAL_SAI_TxHalfCpltCallback or HAL_SAI_TxCpltCallback.

- Move the call to the user’s audio callback from the HAL_SAI_Rx callbacks to the HAL_SAI_Tx callbacks. This also requires flipping which half of the buffer you read from in the audio callback, i.e. in the TxHalfCpltCallback you need to read from the back half of the rx buffer and write to the front half of the tx buffer, and vice versa for the TxCpltCallback.
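Both approaches can be sketched on the host as follows. This assumes mono int16 buffers, a stand-in `Render()` in place of the int/float conversion plus the user’s callback, and hypothetical callback names in place of the HAL ones. For the first approach, copying into the tx half that the DMA has just finished sending, and using a single staging buffer, are assumptions. They rely on the measured ordering, where each Tx callback fires about 10 us before the matching Rx callback.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr std::size_t kBlock = 4;          // samples per half
constexpr std::size_t kDma   = 2 * kBlock; // circular buffer size

int16_t rx_buf[kDma];
int16_t tx_buf[kDma];

// Stand-in for int->float conversion, user callback, and float->int conversion.
void Render(const int16_t* in, int16_t* out)
{
    for (std::size_t i = 0; i < kBlock; ++i)
        out[i] = in[i];
}

// Approach 1: render into a staging buffer in the rx callbacks, then copy it
// into the tx half the DMA has just finished sending in the next tx callback.
int16_t staged[kBlock];

void RxHalfCplt_Staged() { Render(rx_buf, staged); }
void RxCplt_Staged()     { Render(rx_buf + kBlock, staged); }
void TxHalfCplt_Staged() { std::memcpy(tx_buf, staged, sizeof staged); }
void TxCplt_Staged()     { std::memcpy(tx_buf + kBlock, staged, sizeof staged); }

// Approach 2: render directly in the tx callbacks, with the halves flipped.
// When TxHalfCplt fires, the newest complete rx half is the back half.
void TxHalfCplt_Direct() { Render(rx_buf + kBlock, tx_buf); }
void TxCplt_Direct()     { Render(rx_buf, tx_buf + kBlock); }
```

Either way, the block written into a tx half is not sent until the DMA comes back around to that half, which is where the extra block of latency comes from.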

With either of these approaches, I can push the audio callback up to ~37 microseconds of computation before the ripple appears, whereas without them the ripple starts to appear at around 26 microseconds.

Because of the additional one-block delay, this is probably not desirable across the board, but it would be nice to have an option in libDaisy to enable one of these approaches for users with small block sizes (where an extra block of latency probably doesn’t matter anyway). I can submit a pull request for this if there is interest.